In parts one and two of this blog series, we discussed VP9 and the implementation of scalability. In this last installment, we will cover the codec itself and the benefits of Vidyo’s new implementation of scalable VP9.

Read more – Improving VP9 Without Changing It – Part 1

Read more – Improving VP9 Without Changing It – Part 2

The asymmetry between encoder and decoder complexity goes back to the very first digital video encoder designs of the late 1980s (H.261), and it is an inherent feature of the predictive, motion-compensated hybrid structures that have historically been used. The sophistication of the encoder has always been a point of differentiation between codec implementations, with many companies developing their own “secret sauce” for improving quality and compression efficiency.

This is often a point of confusion for non-experts. Video coding standards specify the bitstream syntax and the decoding process, not the encoding process, so implementers have tremendous freedom in optimizing their encoders. Interestingly, the efficiency of encoders for MPEG-2, the codec used on DVDs, nearly doubled (i.e., half the bitrate for the same quality) over a period of 10 years with no change to the underlying bitstream syntax. The encoders simply became smarter about where to spend their bits, partly because engineers designed better algorithms and partly because more processing power became available to perform these calculations, even in real time.
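One widely used way for an encoder to decide where to spend its bits is Lagrangian rate-distortion optimization: for each block, it compares candidate coding modes by the cost J = D + λR, where D is the distortion, R is the number of bits spent, and λ is a weight usually tied to the quantizer. The post does not say which techniques Vidyo or any particular DVD encoder used; the sketch below only illustrates the general idea, and the candidate modes and their numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    """One hypothetical way of coding a block."""
    name: str
    distortion: float  # e.g. sum of squared errors vs. the source block
    bits: int          # bits needed to code the block this way


def choose_mode(candidates: list[Candidate], lam: float) -> Candidate:
    """Return the candidate with the lowest Lagrangian cost J = D + lam * R."""
    return min(candidates, key=lambda c: c.distortion + lam * c.bits)


# Hypothetical candidates for a single block.
candidates = [
    Candidate("intra", distortion=120.0, bits=90),
    Candidate("inter", distortion=150.0, bits=40),
    Candidate("skip", distortion=400.0, bits=1),
]

# A small lambda favors quality; a large lambda favors saving bits.
for lam in (0.5, 2.0, 10.0):
    print(lam, choose_mode(candidates, lam).name)
```

The same bitstream syntax can express all three choices; only the encoder's decision rule changes, which is why better encoders can improve efficiency without any change to the standard.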

Vidyo recently launched its own Platform-as-a-Service (PaaS) offering, vidyo.io. As a PaaS, it is designed to be easily available to all: one just needs to download the freely available SDK for their programming language and environment, and connect the code to their own application. The service is cloud-based and billed according to usage. You can read about the engineering behind vidyo.io in my blog post.

It was important for us to be able to offer a codec that would provide full support for scalability, be free of currently known royalty requirements, and offer native interoperability with WebRTC. While we could have used the open source version of scalable VP9 available in the WebM project, we decided to develop a scalable VP9 implementation from scratch.

Vidyo has always placed heavy emphasis on a high quality experience and performance across the widest possible range of platforms, from mobile to high-end 5K systems and beyond. Over the years we have developed a lot of expertise in designing video encoders and developing optimized implementations on various platforms, especially designed with real-time interactive communications in mind. We also wanted to be able to use the error resilience and error concealment mechanisms that we have built into our platform, some of which are patented, and go way beyond what’s available in open source projects, including WebRTC.

Of particular importance for us is mobile performance. Since encoding is done in software, it is imperative to squeeze as much performance as possible out of the available CPU and battery. In fact, as we show below (you can look ahead to Table 1), even in its simplest operating mode (referred to as CPU mode 8), the open source version of VP9 (referred to as OVP9) did not, in our testing, deliver real-time performance for spatially scalable coding of 720p content on typical mobile platforms. The frame rates were around 15 fps, half of the typically required 30 fps. This was a key motivation for us to develop our own optimized version.

The results of our work are now available on our vidyo.io PaaS, and will soon be available across the entire Vidyo platform. The performance improvement has been impressive. To demonstrate it, we need to compare the two codecs across three parameters: quality, bit rate, and complexity. All three parameters affect one another, so to make a proper comparison we need to fix at least one of them. Here we will match the codecs’ quality across their operating range and compare their complexity. The results that follow come from our internal testing under specific conditions; other tests or real-life results may vary, but these results demonstrate the kind of performance benefits our new VP9 codec can bring.

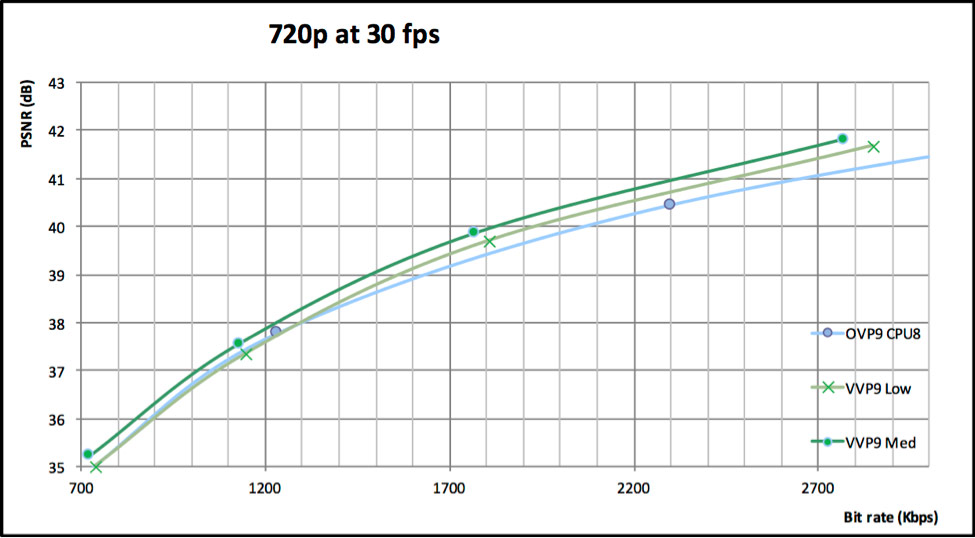

Figure 6: Performance comparison of different versions of VP9 for a 720p video using 2 spatial layers and 3 temporal layers

Figure 6 shows one example of encoding a 720p, 30 fps sequence with 2 spatial layers and 3 temporal layers using the two versions of the VP9 codec. The graph shows PSNR (Peak Signal-to-Noise Ratio) vs. bit rate. (PSNR is a measure of video quality; the higher, the better.) The numbers refer to the top-layer PSNR and the total bit rate. First, the video is encoded (using a fixed quantizer) at four different operating points using OVP9 at CPU mode 8. As we mentioned earlier, this is the simplest operating mode of this particular software implementation, i.e., it uses the least CPU and, of course, provides the lowest compression efficiency. But even in that mode, OVP9 did not provide real-time performance (i.e., 30 fps) for 2 spatial layers at 720p. The same video was also encoded using Vidyo’s implementation of VP9 (referred to here as VVP9) in two different complexity modes: low and medium. We can see that VVP9 in the low mode offers about the same or slightly better compression efficiency than OVP9, while the medium mode provides an additional improvement.
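For readers unfamiliar with PSNR, here is a minimal sketch of the standard definition for 8-bit samples, PSNR = 10 * log10(255^2 / MSE), where MSE is the mean squared error between the original and decoded samples. It assumes flat lists of samples from a single image plane; the post does not say exactly how the Figure 6 values were computed (for example, luma only, or averaged over frames), so treat this as an illustration of the metric rather than of the test procedure.

```python
import math


def psnr(reference: list[int], decoded: list[int], max_value: int = 255) -> float:
    """Peak Signal-to-Noise Ratio in dB between two equal-length sample lists."""
    if len(reference) != len(decoded) or not reference:
        raise ValueError("inputs must be non-empty and the same length")
    # Mean squared error between original and decoded samples.
    mse = sum((r - d) ** 2 for r, d in zip(reference, decoded)) / len(reference)
    if mse == 0:
        return math.inf  # identical inputs: no noise at all
    return 10 * math.log10(max_value ** 2 / mse)


# Tiny hypothetical example: four 8-bit samples with small coding errors.
original = [52, 55, 61, 59]
decoded = [54, 55, 60, 59]
print(f"{psnr(original, decoded):.2f} dB")
```

Because PSNR is logarithmic, each halving of the MSE adds about 3 dB, which is why fairly small vertical gaps between curves in a PSNR vs. bit rate plot can reflect meaningful quality differences.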

Figure 6 tells us that in this configuration we have matched the two codecs’ quality performance. The key comparison now, however, is the processing cost at which we get this compression efficiency. That’s where things get exciting. Table 1 below shows the performance of the two codecs in frames per second on two popular mobile platforms, an iPhone 6 Plus and a Samsung Galaxy S6 Edge+ (both in single-threaded mode).

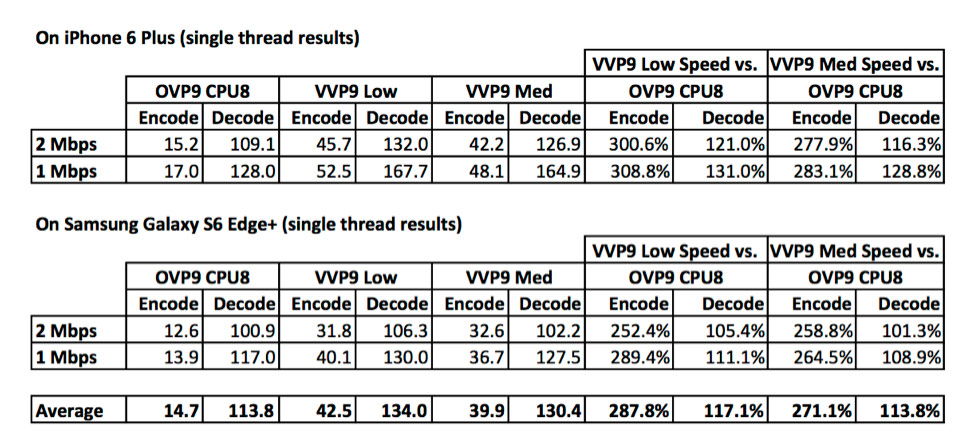

Table 1: CPU performance in iOS and Android platforms

The left-side columns show absolute fps, whereas the right-side columns show the percentage of OVP9 encoding and decoding speed achieved by VVP9 (in the two modes). We can see that, on average across the two devices and bit rates, we got a 2.7-2.8x speed-up when encoding, while producing even slightly better video quality (PSNR). In the iPhone’s case, VVP9 in the low mode more than tripled the speed. We should emphasize that these results refer to scalable encoding with two spatial layers. Even in single-layer mode, however, we saw speed-ups of up to 1.7x. As Table 1 shows, decoding was also faster, though not as significantly. This is expected, since the decoding process is much simpler and there is less room for optimization.

The significantly increased encoding speed in particular has direct implications for battery life on mobile devices. A higher encoding speed, as measured in fps, means that the device’s CPU requires less time to encode each video frame. If the encoding rate is below 30 fps, as it was in the OVP9 test results, then even if the CPU spends all its time encoding video, it still cannot keep up with a typical camera’s 30 fps. If the encoding rate is well above 30 fps, as it was in our VVP9 results, then the CPU has enough time to encode each frame and then deal with other application tasks or simply go to sleep. This immediately translates to lower battery usage. The smaller improvement in decoding speed has a similar but correspondingly smaller power consumption benefit. The total power consumption improvement also depends on the other tasks that the video communication application has to perform, including audio encoding and decoding, UI management, etc. In mobile devices dedicated to video encoding (e.g., body cameras worn by first responders), the savings can easily translate to double the battery life.
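The frame-budget reasoning above can be made concrete with a little arithmetic: at 30 fps capture each frame has a budget of 1000 / 30 ≈ 33.3 ms, and an encoder running at E fps needs 1000 / E ms per frame, so it must be busy for a fraction 30 / E of the time. The sketch below assumes a constant per-frame encoding cost and treats busy time as a rough proxy for power; it plugs in the approximately 15 fps OVP9 figure mentioned earlier and, as a simplification, multiplies it by the average 2.7x speed-up, even though Table 1 values vary by device and bit rate.

```python
# Assumptions: a 30 fps camera and a constant per-frame encoding cost.
CAPTURE_FPS = 30.0


def encode_duty_cycle(encode_fps: float, capture_fps: float = CAPTURE_FPS) -> float:
    """Fraction of wall-clock time the CPU must spend encoding to keep pace.

    A value above 1.0 means the encoder falls behind even if it never idles.
    """
    return capture_fps / encode_fps


def report(label: str, encode_fps: float) -> None:
    budget_ms = 1000.0 / CAPTURE_FPS  # time available per captured frame
    cost_ms = 1000.0 / encode_fps     # time needed to encode one frame
    duty = encode_duty_cycle(encode_fps)
    status = "keeps up" if duty <= 1.0 else "falls behind"
    idle = max(0.0, 1.0 - duty)       # share of time left for other work or sleep
    print(f"{label}: {cost_ms:.1f} ms/frame vs {budget_ms:.1f} ms budget, "
          f"busy {min(duty, 1.0):.0%}, idle {idle:.0%} ({status})")


report("OVP9, ~15 fps", 15.0)
report("VVP9, 2.7x speed-up", 15.0 * 2.7)
```

With these inputs, the OVP9 case needs about 66.7 ms per frame against a 33.3 ms budget and falls behind, while the VVP9 case needs about 24.7 ms per frame and leaves the CPU idle roughly a quarter of the time.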

Some of these improvements are also present in desktop applications. For example, in a 64-bit Windows environment we saw a roughly 1.6-1.7x speed-up for VVP9 over OVP9 when encoding the same 720p content. (In desktop environments, decoding speed comparisons are less relevant, since both codecs already decode several times faster than real time.) Battery and processor resources are not as limited on desktops as on mobile devices, but the encoding performance gains can still free up processor time for other tasks and/or extend battery life on laptops.

The net result for Vidyo end users is that they can enjoy all the benefits of an open standard, with full compatibility with existing browser-based implementations (or indeed any VP9 implementation), while also enjoying substantially better performance and significantly extending how long their mobile devices can encode video before needing to be plugged in. This is one of the great strengths of open standards: they allow different implementations that can all interoperate.
